Safety Expert Fired by Figure AI Warns: These Robots Can Harm Humans

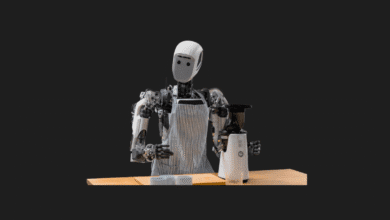

Figure AI, known for its humanoid robots, is at the center of a significant lawsuit. The company’s former product safety expert, Robert Gruendel, alleges he was fired for raising concerns that these robots could cause serious injury to humans.

The Growing Danger of Humanoid Robotics

The explosion in the Artificial Intelligence (AI) field, coupled with significant advancements in robotics, has suddenly made humanoid robots—once seen only in science fiction—a much more tangible reality. Today, these robots are slowly being integrated into the workforce, and they are expected to enter homes as assistants in the very near future. However, some experts are warning that this could be quite dangerous for humans. Even an ordinary software glitch in these robots could result in serious human injury.

This destructive potential of robots is the subject of a new lawsuit filed this week. The former product safety expert at Figure AI, one of the leading makers of humanoid robots, is suing the company, claiming he was fired for voicing safety concerns.

Figure AI Robots Could Severely Injure a Human If Malfunctioning

According to statements in the court filing, Robert Gruendel, the company’s former Head of Product Safety, reported during impact and speed tests in July that the robots moved at “superhuman speeds” and generated forces reaching “twenty times the pain threshold.” The filing states that these forces are more than double what is required to fracture an adult human skull.

The complaint also details that during one test, a malfunctioning robot tore an approximately 6-millimeter gash in a steel refrigerator door while a human employee was standing right beside it. Following these findings, Gruendel prepared a comprehensive safety roadmap within the company. The document detailed the sensor limitations, force ceilings, software safety protocols, and testing procedures needed to ensure the robots’ safe integration into the workplace.

However, Gruendel claims his safety plan was “significantly pruned” by company management, and that critical measures were disabled because they supposedly “slowed down product development.”

When Gruendel believed the safety shortcomings had reached a level that could mislead investors, he escalated the matter directly to CEO Brett Adcock and Chief Engineer Kyle Edelberg. Gruendel alleges these warnings were dismissed as unimportant, and that he was treated adversely for making the job more difficult. He says he was abruptly terminated just days after sending his clearest, most thoroughly documented objections to management.

Figure AI rejects all allegations. In a statement, the company claims Gruendel was fired due to “poor performance” and asserts that the accusations in the lawsuit are baseless claims that will be easily refuted in court.

This lawsuit against Figure AI brings to mind a video that went viral on social media a few months ago showing a “glitching robot.” The video, in which a robot with a software issue suddenly thrashed uncontrollably, destroying its surroundings, thoroughly spooked people. A similar malfunction by a much larger and more powerful robot used in factories or homes could lead to far more terrifying consequences. For this reason, Gruendel’s warnings do not seem unfounded.